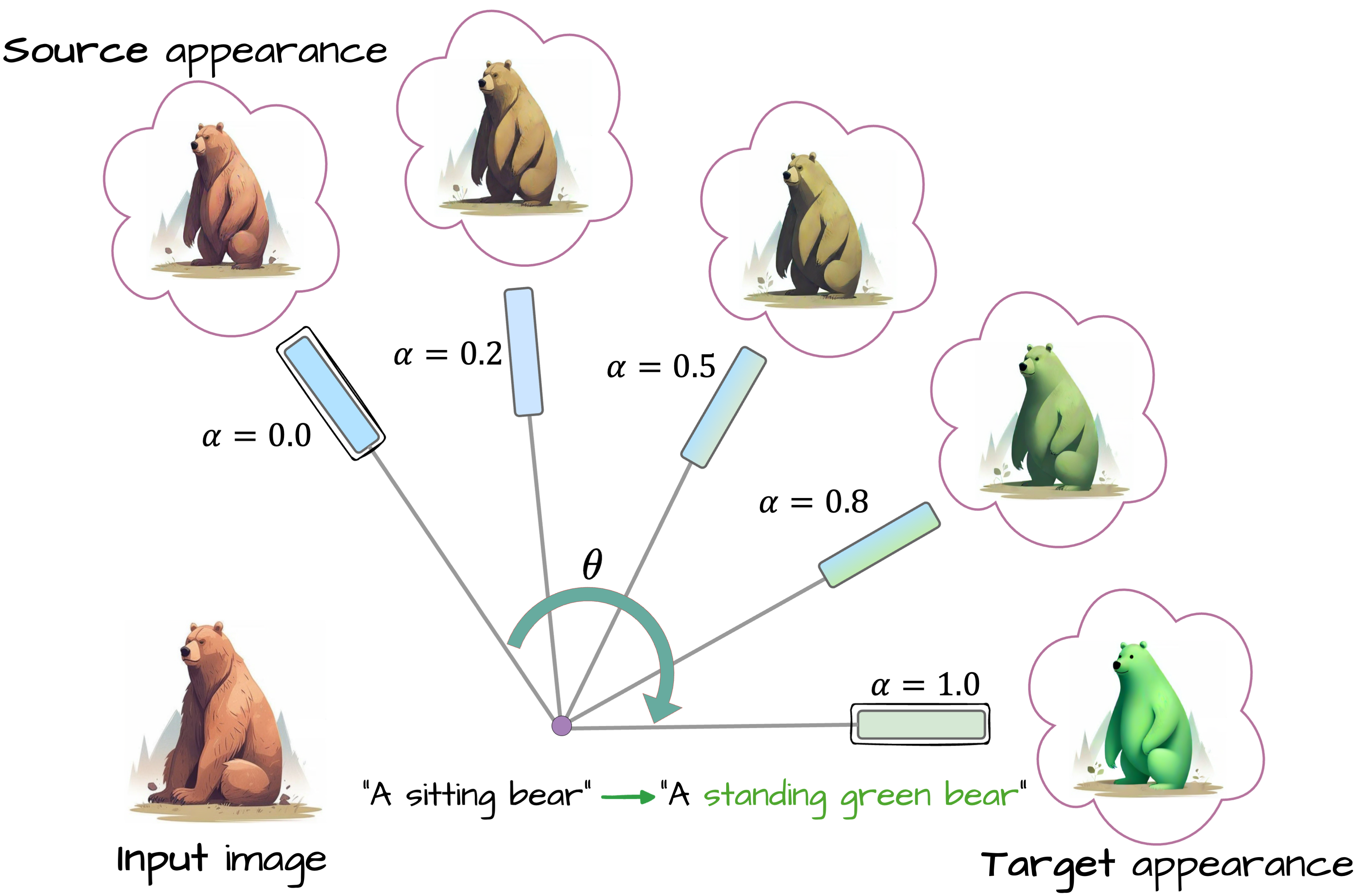

Click a thumbnail, then adjust α (Appearance alignment) and β (Structure alignment) from 0 (complete alignment with input image) to 1 (complete adherence to text prompt) to see the output.


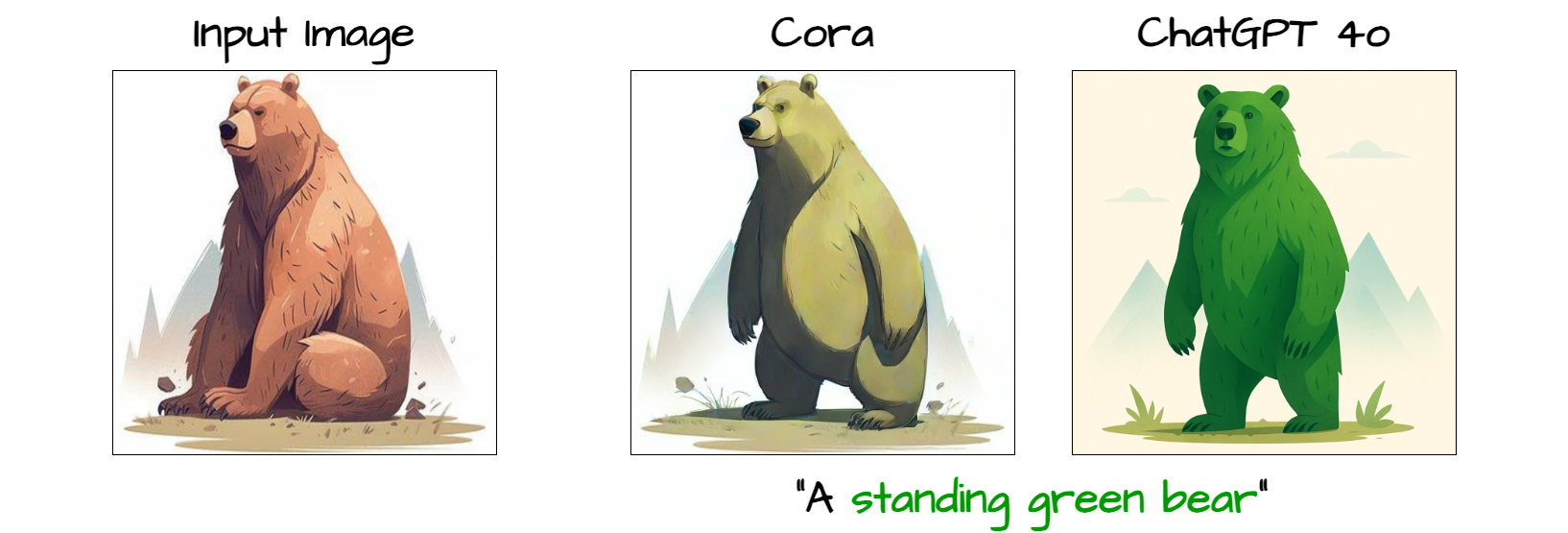

The audio track for this teaser video was generated with the help of Suno.

TL;DR: Cora is a new image editing method that enables flexible and accurate edits, such as pose changes, object insertions, and background swaps, using only 4 diffusion steps. Unlike other fast methods that create visual artifacts, Cora uses semantic correspondences between the original and edited image to preserve structure and appearance where needed. It works by combining correspondence-aware noise correction with interpolated attention maps.

It's fast, controllable, and produces high-quality edits with better fidelity than existing few-step methods.


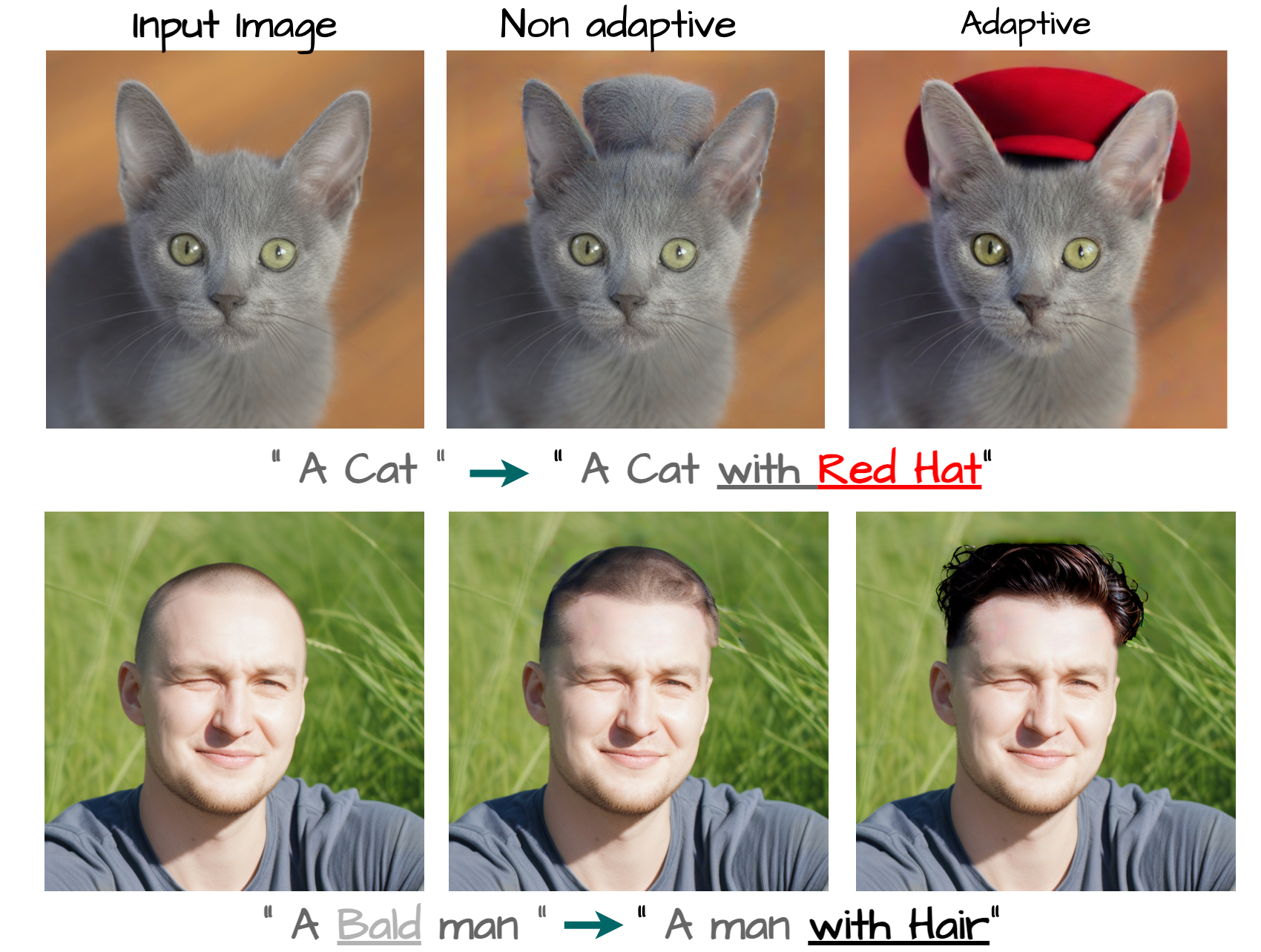

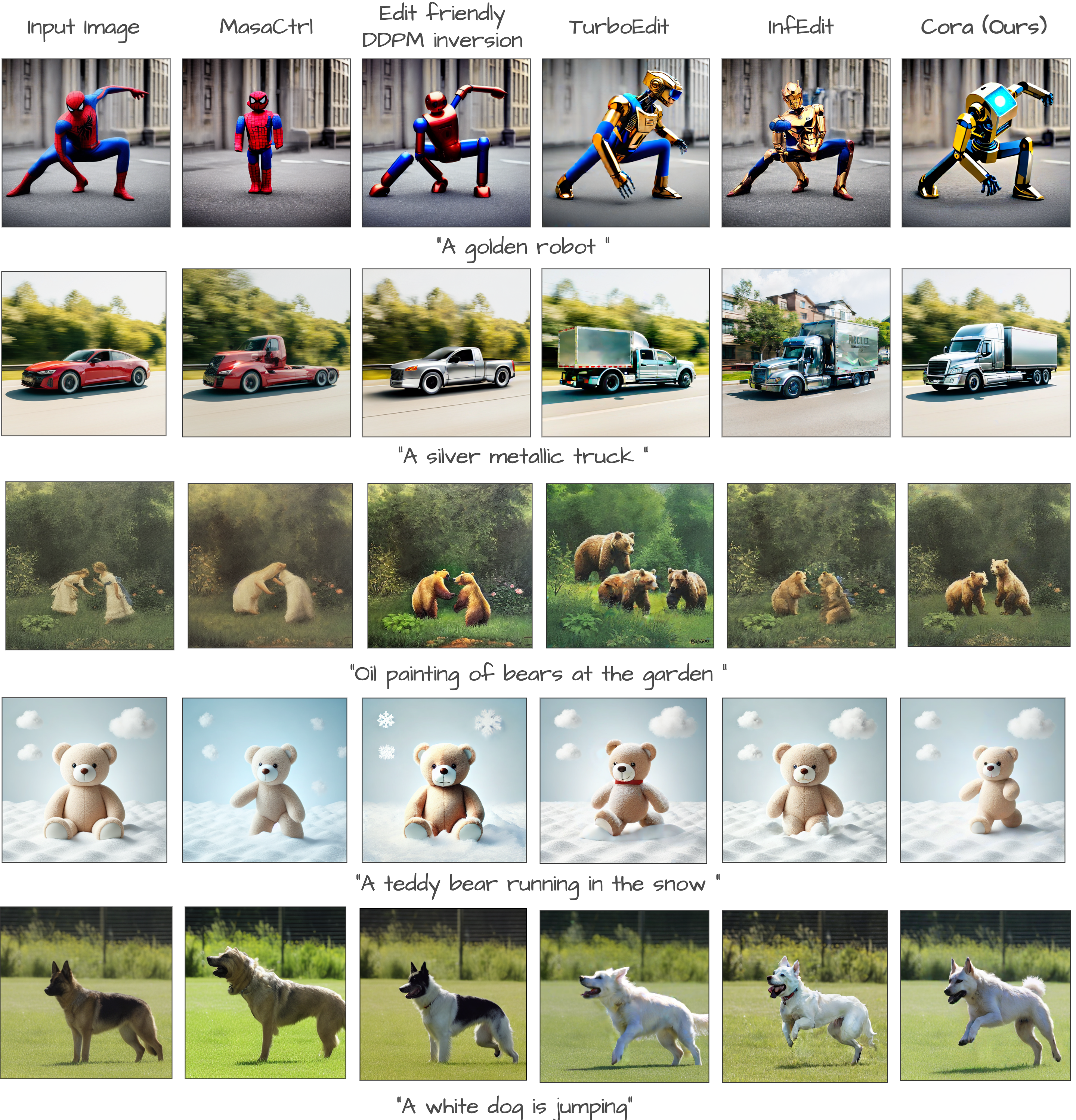

Image editing is an important task in computer graphics, vision, and VFX, with recent diffusion-based methods achieving fast and high-quality results. However, edits requiring significant structural changes, such as non-rigid deformations, object modifications, or content generation, remain challenging. Existing few-step editing approaches produce artifacts such as irrelevant textures, or struggle to preserve key attributes of the source image (e.g., pose). We introduce Cora, a novel editing framework that addresses these limitations through correspondence-aware noise correction and interpolated attention maps. Our method aligns textures and structures between the source and target images through semantic correspondence, enabling accurate texture transfer while generating new content when necessary. Cora offers control over the balance between content generation and preservation. Extensive quantitative and qualitative experiments demonstrate that Cora excels at maintaining structure, textures, and identity across diverse edits, including pose changes, object addition, and texture refinements. User studies confirm that Cora outperforms alternatives.

Click a thumbnail to see the editing prompt and interactively compare with and without CLC.


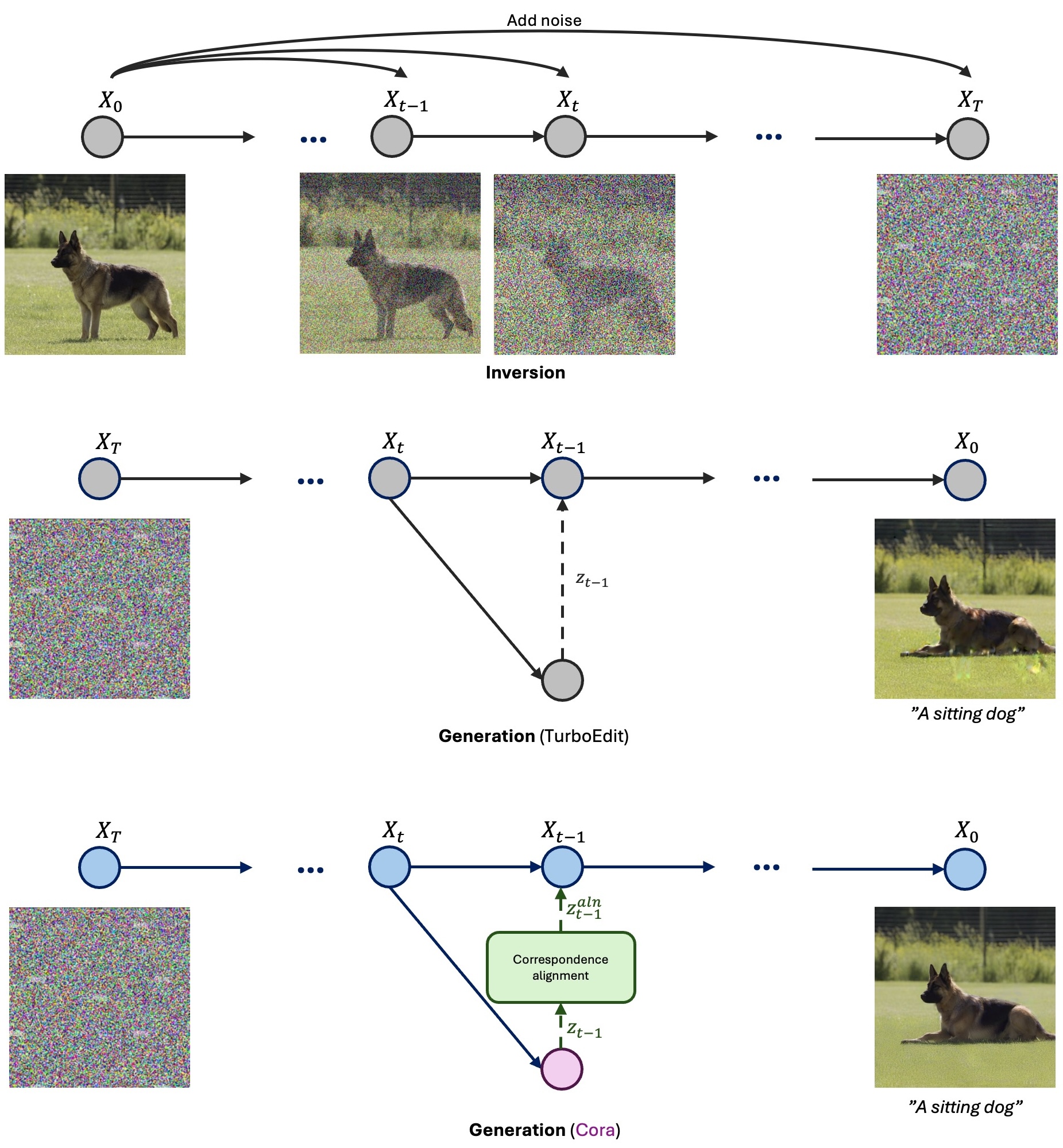

Image editing with diffusion models typically requires inversion: recovering the denoising trajectory from the input image X_0 to noise X_T. We build on Edit-Friendly DDPM inversion, where noise is added step-by-step using the forward scheduler to produce X_1, X_2, ..., X_T.

During generation, a denoising step from X_t is not guaranteed to map back to X_{t-1}, so the method introduces a correction term z. This enables perfect reconstruction with the original prompt and meaningful edits with new prompts.
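To make the role of the correction term concrete, here is a minimal NumPy sketch of this style of inversion. It is an illustration under stated assumptions, not the authors' implementation: `toy_mean` is a hypothetical stand-in for the denoiser's predicted mean, and the `alpha_bar` and `sigma` schedules are made-up values for a 4-step setting.

```python
import numpy as np

def invert(x0, alpha_bar, sigma, predict_mean, rng):
    """Build noisy latents x_1..x_T from x0, then solve for the correction terms z_t."""
    T = len(alpha_bar) - 1  # index 0 corresponds to the clean image
    xs = [x0]
    for t in range(1, T + 1):
        eps = rng.standard_normal(x0.shape)
        # Forward-scheduler noising of the input image at level t.
        xs.append(np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps)
    # z_t is whatever makes the step x_t -> x_{t-1} exact.
    zs = {t: (xs[t - 1] - predict_mean(xs[t], t)) / sigma[t] for t in range(1, T + 1)}
    return xs, zs

def generate(x_T, zs, sigma, predict_mean):
    """Run the reverse process, injecting the stored correction terms."""
    x = x_T
    for t in range(len(zs), 0, -1):
        x = predict_mean(x, t) + sigma[t] * zs[t]
    return x

# Toy setup (all values below are assumptions for illustration).
rng = np.random.default_rng(0)
x0 = rng.standard_normal((8, 8))
alpha_bar = np.array([1.0, 0.8, 0.5, 0.2, 0.05])
sigma = np.array([0.0, 0.3, 0.4, 0.5, 0.6])  # sigma[0] is unused
toy_mean = lambda x, t: 0.9 * x  # hypothetical stand-in for the denoiser mean

xs, zs = invert(x0, alpha_bar, sigma, toy_mean, rng)
x_rec = generate(xs[-1], zs, sigma, toy_mean)
print(np.allclose(x_rec, x0))
```

With the same `predict_mean` in both passes, the reconstruction should match the input up to floating-point error. Editing corresponds to swapping in a mean predictor conditioned on a new prompt while reusing the stored `zs`.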

However, in few-step generation, directly using z leads to artifacts like ghosting and silhouette remnants, especially when the edit involves structural change. We identify the cause: the correction terms are spatially aligned with the original image, not the edited one.

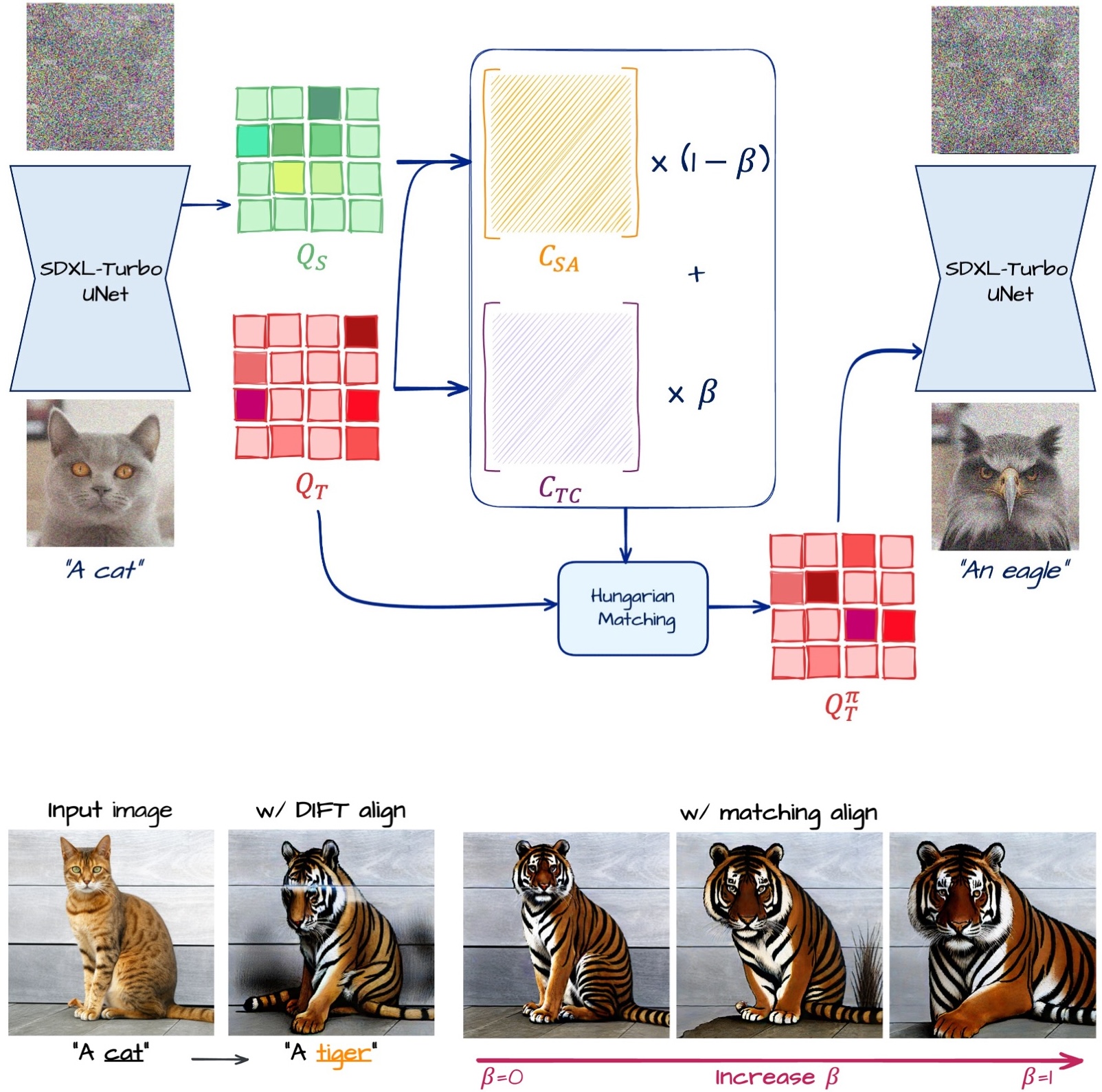

Cora addresses this by aligning z to the target content using pixel correspondences computed from diffusion features, enabling clean, structure-consistent edits even in few-step settings.
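As a rough illustration of this alignment, the sketch below warps per-location correction terms from the source layout to the target layout by matching features. The nearest-neighbor cosine matching and the function name `align_correction` are assumptions made for the example; the actual correspondence procedure in Cora may differ.

```python
import numpy as np

def align_correction(z_src, feat_src, feat_tgt):
    """Gather source correction terms at the locations that best match each target location.

    z_src:    (N, C) correction terms, one row per source location
    feat_src: (N, D) features at source locations
    feat_tgt: (M, D) features at target locations
    Returns the aligned (M, C) correction terms and the (M,) match indices.
    """
    fs = feat_src / np.linalg.norm(feat_src, axis=1, keepdims=True)
    ft = feat_tgt / np.linalg.norm(feat_tgt, axis=1, keepdims=True)
    sim = ft @ fs.T              # (M, N) cosine similarities
    match = sim.argmax(axis=1)   # best source location for each target location
    return z_src[match], match

# Toy check: the "edited" features are a shuffled copy of the source features,
# so the aligned correction terms should follow the same shuffle.
rng = np.random.default_rng(0)
feat_src = rng.standard_normal((6, 8))
z_src = rng.standard_normal((6, 4))
perm = np.array([2, 0, 3, 1, 5, 4])
z_aligned, match = align_correction(z_src, feat_src, feat_src[perm])
print(np.array_equal(match, perm))
```

Note that nearest-neighbor matching lets several target locations pick the same source location, which is acceptable for transferring texture but is not a one-to-one mapping.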

To preserve the structure of the input image, we align self-attention queries of the target with those of the input. Rather than direct pixel-wise alignment, we use one-to-one matching via the Hungarian algorithm, which effectively maintains structural consistency.
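A one-to-one query matching of this kind can be sketched with SciPy's `linear_sum_assignment`, which solves the assignment problem that the Hungarian algorithm addresses. The cosine-similarity cost, the function name `match_and_blend_queries`, and the linear blend controlled by β are assumptions for illustration, not details confirmed by the text above.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def match_and_blend_queries(q_src, q_tgt, beta):
    """Match each target query to a distinct source query, then blend the two.

    q_src, q_tgt: (N, D) self-attention queries of the source and target
    beta: 0 keeps the matched source queries, 1 keeps the target queries
    """
    qs = q_src / np.linalg.norm(q_src, axis=1, keepdims=True)
    qt = q_tgt / np.linalg.norm(q_tgt, axis=1, keepdims=True)
    cost = -(qt @ qs.T)  # negate similarity: the solver minimizes total cost
    rows, cols = linear_sum_assignment(cost)
    assignment = np.empty(len(q_tgt), dtype=int)
    assignment[rows] = cols  # assignment[i] = source index paired with target i
    blended = (1.0 - beta) * q_src[assignment] + beta * q_tgt
    return blended, assignment

# Toy check: target queries are a slightly perturbed shuffle of the source queries.
rng = np.random.default_rng(0)
q_src = rng.standard_normal((5, 16))
perm = np.array([3, 1, 4, 0, 2])
q_tgt = q_src[perm] + 1e-3 * rng.standard_normal((5, 16))
blended, assignment = match_and_blend_queries(q_src, q_tgt, beta=0.0)
print(np.array_equal(assignment, perm))
```

Unlike per-location nearest-neighbor matching, the assignment uses every source query exactly once, so no source region is duplicated or dropped when its structure is carried over.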
